Crafting Realistic Agents in Virtual Worlds

This post is a retrospective on the tutorial series written to help readers understand the paper “Generative Agents: Interactive Simulacra of Human Behavior” (Park et al., 2023).

Simulations offer a way to study complex systems with many moving parts, which may or may not exhibit well-defined behaviors. As a simulated system evolves, researchers can predict future states, describe the system's behavior, and explore scenarios such as interventions and their outcomes. Because a simulation unfolds in computational time, we can observe these dynamics without waiting for them to play out in real time. This potential has made simulations a point of interest across diverse domains. However, it’s important to recognize that no simulation can perfectly replicate the system it models: every simulation is built on assumptions that may not hold in the real world.

In physics and biology, simulations are used to investigate physical and biological systems. Similarly, in economics and the social sciences, they are used to explore individual human behavior, organizational behavior, and social dynamics.

In the tutorial series, we focus on sociological simulations, where software agents (representing humans) exhibit behaviors and personalities commonly observed in the real world. While quantifying their resemblance to real humans is challenging, the authors of the paper present examples that closely mirror expected real-world behaviors. A deeper examination might still reveal behaviors that seem awkward or unexpected. Nevertheless, the paper provides a solid foundation for future research in social simulations.

The paper's primary goal is to demonstrate how LLMs can create “believable proxies” of human behavior, imbuing agents with a sense of life and realism as they react and plan autonomously. For a deeper understanding, I highly recommend reviewing the related works section of the paper.

A notable advancement is the shift away from hardcoded human behaviors in simulations. For instance, in traditional agent-based modeling, agents might follow a predetermined schedule throughout the simulation. In contrast, Simulacra allows agents the autonomy to decide their daily and hourly schedules, agendas, and goals.

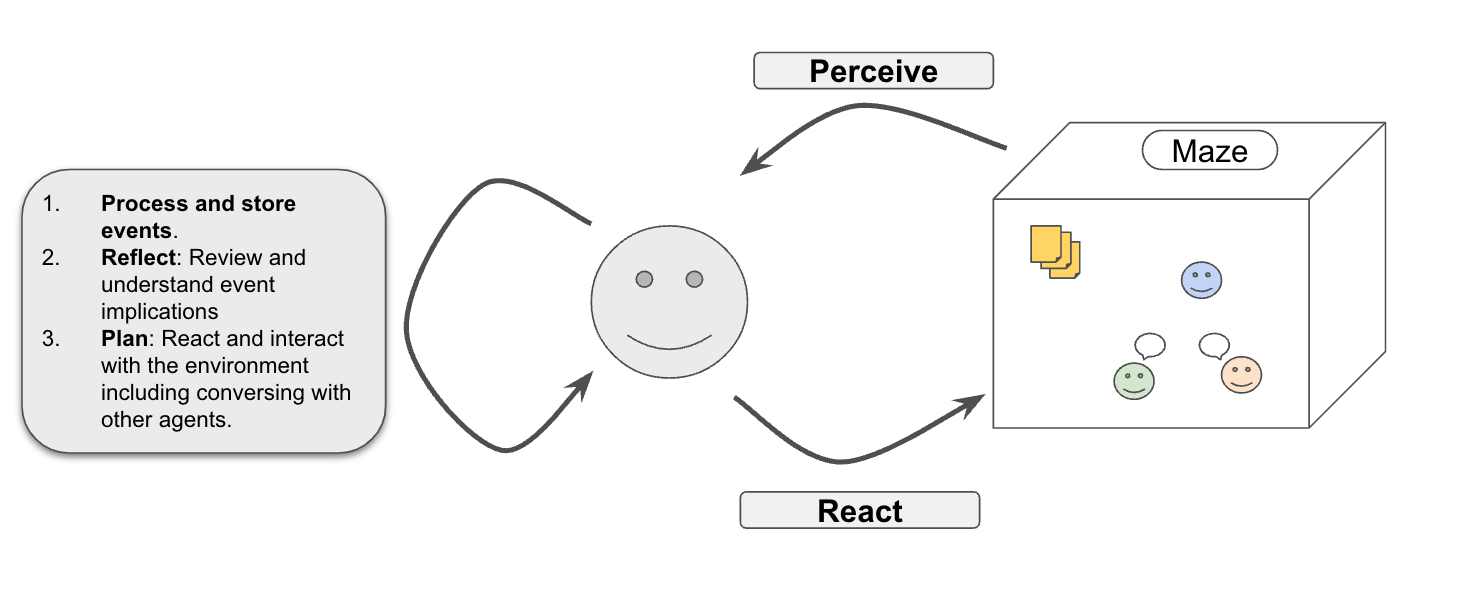

The design of the simulation is illustrated in Figure 1.

Within this environment, agents interact with various elements. The Maze provides structural components that enhance the realism of these interactions, including designated residential and working areas. These areas offer agents contextual cues about their potential actions and conversational topics appropriate for each setting.

Agents perceive their surroundings, noting objects nearby and the presence of other agents.

These observations are stored in their memory, alongside other relevant recollections, informing their subsequent actions.

Armed with these retrieved memories, agents decide on their next steps, whether it’s interacting with the environment or engaging in conversations with other agents.

This ongoing cycle yields a convincing illusion of realism within the simulations.
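The cycle described above (perceive, store, retrieve, act) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code from the paper or the notebooks: the `Agent` class, its method names, the keyword-based retrieval, and the dictionary-based world are all hypothetical, and the `act` method is a plain stand-in for the step where an LLM would be consulted.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical agent; all names and fields here are illustrative."""
    name: str
    position: tuple[int, int]
    memory: list[str] = field(default_factory=list)

    def perceive(self, world: dict[tuple[int, int], str], radius: int = 1) -> list[str]:
        """Describe every object or agent within `radius` tiles of this agent."""
        x, y = self.position
        return [
            f"{thing} at {pos}"
            for pos, thing in sorted(world.items())
            if pos != self.position
            and abs(pos[0] - x) <= radius
            and abs(pos[1] - y) <= radius
        ]

    def remember(self, observations: list[str]) -> None:
        """Append new observations to the memory stream."""
        self.memory.extend(observations)

    def retrieve(self, keyword: str, limit: int = 3) -> list[str]:
        """Naive keyword retrieval (an assumption), most recent memories first."""
        hits = [m for m in reversed(self.memory) if keyword in m]
        return hits[:limit]

    def act(self, relevant: list[str]) -> str:
        """Stand-in for the decision step that would normally query an LLM."""
        if relevant:
            return f"{self.name} reacts to: {relevant[0]}"
        return f"{self.name} keeps idle"


# One pass through the cycle on a tiny, made-up world.
world = {(1, 1): "bed", (2, 1): "Bob", (5, 5): "cafe counter"}
agent = Agent(name="Alice", position=(1, 2))
observations = agent.perceive(world)
agent.remember(observations)
print(agent.act(agent.retrieve("Bob")))
```

Running the loop repeatedly, with agents moving between passes, is what produces the evolving behavior; the retrieval step is where a real implementation would rank memories rather than match a keyword.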

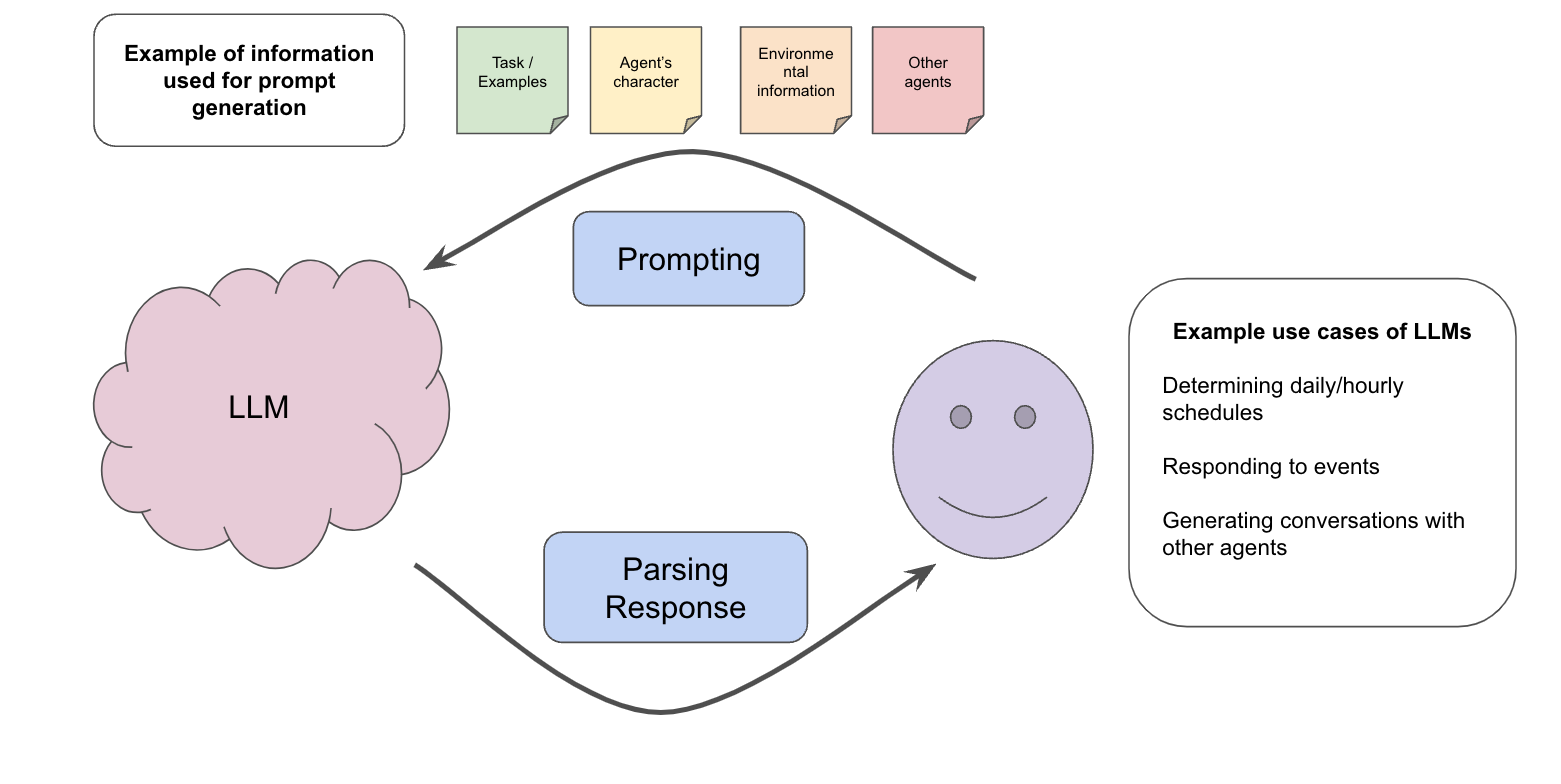

Figure 2 further clarifies how large language models (LLMs) are integrated into the simulation. Agents act as repositories for memories and events, but when a decision is required, an LLM is consulted. The prompts sent to the LLM are enriched with information critical for shaping the response, including the agent’s personality, observations of the surrounding environment, and past events.
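To make the prompt enrichment concrete, here is a minimal sketch of how the three ingredients named above (personality, observations, past events) might be assembled into a single prompt string. The function name `build_prompt`, the section titles, and the layout are assumptions made for illustration; the prompt templates used in the original code differ.

```python
def build_prompt(
    persona: str,
    observations: list[str],
    memories: list[str],
    question: str,
) -> str:
    """Assemble a plain-text prompt from an agent's context (hypothetical layout)."""
    sections = [
        ("Persona", [persona]),
        ("Current observations", observations),
        ("Relevant memories", memories),
    ]
    lines = []
    for title, items in sections:
        lines.append(f"{title}:")
        # Show an explicit placeholder so empty sections are visible to the model.
        lines.extend(f"- {item}" for item in items or ["(none)"])
    lines.append("")
    lines.append(question)
    return "\n".join(lines)


prompt = build_prompt(
    persona="Alice is a friendly baker.",
    observations=["Bob at (2, 1)"],
    memories=[],
    question="What does Alice do next?",
)
print(prompt)
```

The resulting string would then be passed to whichever LLM client the simulation uses; that call is deliberately left out here.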

It’s crucial to understand that responses from LLMs may not always match the expected format or content, which makes interpreting them a challenge in itself. The tutorial series does not delve into the nuances of parsing LLM responses.
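Although the tutorial leaves this topic aside, a small sketch shows why it is hard: a free-text reply has to be mapped onto something the simulation can execute, with a fallback when nothing usable is found. The action vocabulary `ALLOWED_ACTIONS` and the function `parse_action` are hypothetical and are not taken from the original code.

```python
import re

# Hypothetical action vocabulary; a real simulation would define its own.
ALLOWED_ACTIONS = {"move", "talk", "wait"}


def parse_action(response: str, default: str = "wait") -> str:
    """Return the first allowed action word in a free-text reply, else `default`."""
    for word in re.findall(r"[a-z]+", response.lower()):
        if word in ALLOWED_ACTIONS:
            return word
    return default


print(parse_action("I think Alice should TALK to Bob."))
print(parse_action("Sure! Here is a poem about bread."))
```

Even this simple approach has obvious failure modes (for example, a reply that says "do not move" still matches `move`), which is part of why response parsing deserves more care than this sketch gives it.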

The original Simulacra code is extensive and complex, which makes it hard to follow, especially for newcomers. To provide a more accessible introduction, I have streamlined the code by omitting the frontend visualization. The result is a simpler environment (the Maze) in which generative agents navigate. There is no visual feedback, but agents' movements can still be verified by printing their coordinates.
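As an illustration of verifying movement without a frontend, the sketch below moves a position through a tiny grid and prints the coordinates after each step. The `Maze` class, the `step` function, and the text-based map format are assumptions for this example and do not reproduce the Maze used in the notebooks.

```python
class Maze:
    """Hypothetical grid world: '#' marks a wall, '.' a walkable tile."""

    def __init__(self, rows: list[str]):
        self.rows = rows

    def is_walkable(self, x: int, y: int) -> bool:
        in_bounds = 0 <= y < len(self.rows) and 0 <= x < len(self.rows[y])
        return in_bounds and self.rows[y][x] != "#"


# Direction name -> (dx, dy); y grows downward, as in most tile maps.
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def step(maze: Maze, position: tuple[int, int], direction: str) -> tuple[int, int]:
    """Return the new position, or the old one if the target tile is blocked."""
    dx, dy = MOVES[direction]
    target = (position[0] + dx, position[1] + dy)
    return target if maze.is_walkable(*target) else position


maze = Maze(["....", ".##.", "...."])
position = (0, 0)
trace = [position]
for direction in ["right", "down", "right", "right", "down"]:
    position = step(maze, position, direction)
    trace.append(position)
    print(f"{direction:>5} -> {position}")
```

A blocked move shows up in the printed trace as an unchanged coordinate, which is usually enough to spot an agent stuck against a wall.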

The primary focus of this tutorial is to construct generative agents equipped with the autonomy to make decisions and interact with others.

This tutorial is organized as a series of four notebooks that gradually build up a simulation of an artificial society.

Note: LLM prompting happens only in notebooks 1.3 and 1.4.

To streamline the learning process, certain complex components have been excluded from this tutorial, including agents’ reflection and path planning within the environment.