Task: Build an understanding of time series forecasting problems, challenges, and techniques through hands-on Python notebooks

Libraries: GluonTS, neuralforecast, Chronos, PyTorch, NumPy, Matplotlib

Series Overview: This tutorial series covers time series forecasting, from classical methods to LLM-based approaches, in 7 modules:

- Modules 1-2 - Cover the basics of time series forecasting, including an introduction to GluonTS

- Module 3 - Focuses on data and problem formulation for time series modeling

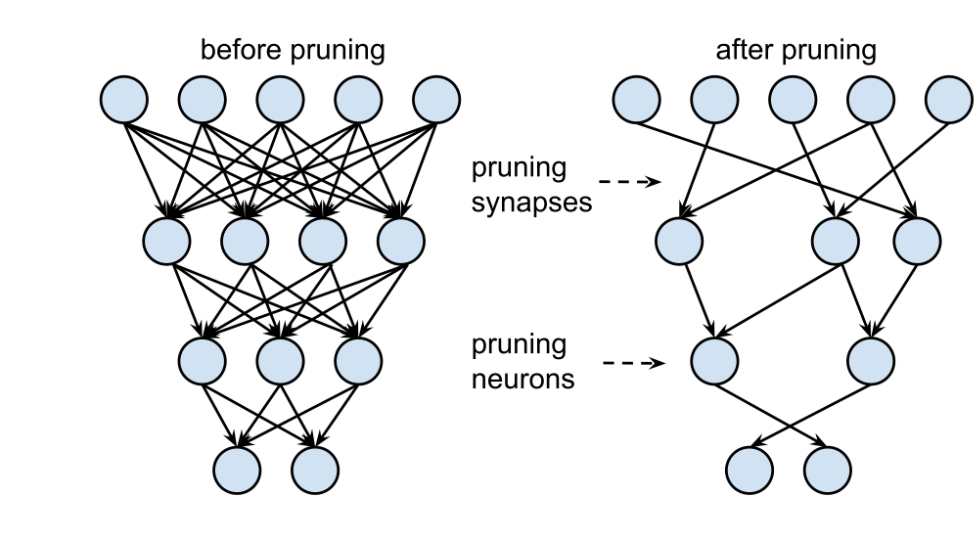

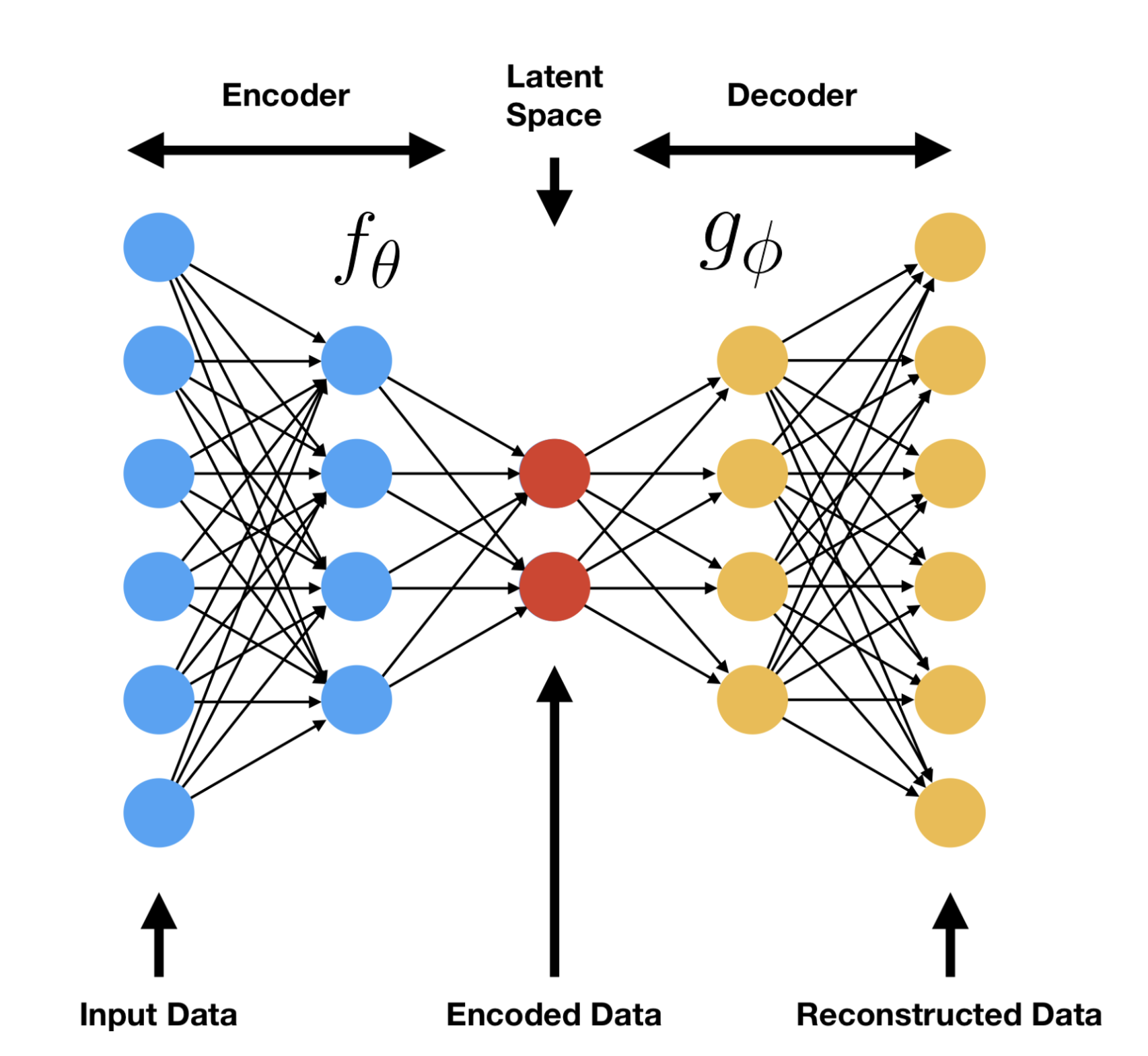

- Modules 4-5 - Explore classical and neural network-based forecasting approaches

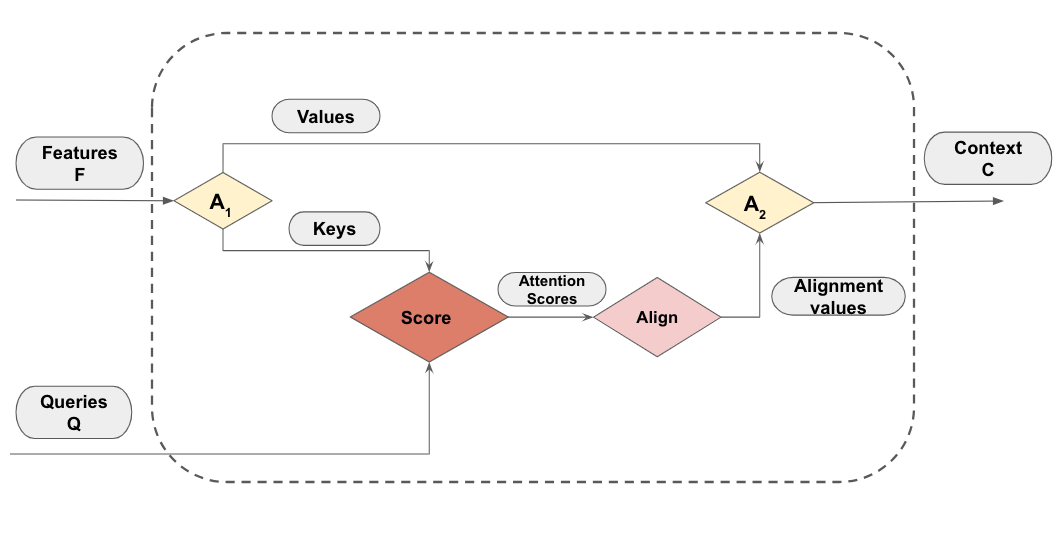

- Module 6 - Covers transformer-based architectures for time series

- Module 7 - Introduces LLM-based approaches for time series forecasting
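
As a rough illustration of the data and problem formulation topic listed for Module 3, the sketch below shows one common way to turn a single series into supervised (context, target) pairs with a sliding window. It uses only NumPy. The function name `make_windows` and the parameters `context_length` and `horizon` are assumptions chosen for this example, not names taken from the notebooks or from any of the libraries above.

```python
import numpy as np


def make_windows(series, context_length, horizon):
    """Split a 1-D series into (context, target) pairs with a sliding window.

    Hypothetical helper for illustration only; not part of GluonTS,
    neuralforecast, or Chronos.
    """
    series = np.asarray(series, dtype=float)
    n_windows = len(series) - context_length - horizon + 1
    if n_windows <= 0:
        raise ValueError("series is too short for the requested window sizes")

    # Each context holds `context_length` past values; each target holds
    # the `horizon` values that immediately follow that context.
    contexts = np.stack(
        [series[i : i + context_length] for i in range(n_windows)]
    )
    targets = np.stack(
        [
            series[i + context_length : i + context_length + horizon]
            for i in range(n_windows)
        ]
    )
    return contexts, targets


# Toy series 0.0, 1.0, ..., 9.0 (made-up data, just to show the shapes).
series = np.arange(10.0)
contexts, targets = make_windows(series, context_length=4, horizon=2)
print(contexts.shape, targets.shape)
```

With 10 observations, a context of 4, and a horizon of 2, this yields 5 windows: the first pairs `[0, 1, 2, 3]` with `[4, 5]`, and the last pairs `[4, 5, 6, 7]` with `[8, 9]`. Forecasting libraries typically handle this windowing internally, so a helper like this is mainly useful for building intuition.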